As organizations adopt scalable platforms like Databricks, real-time data observability and reliable data pipelines become mission-critical.

Soda is a modern data quality platform purpose-built for this challenge. It offers automated data quality checks through both a no-code UI and programmatic integration, making it easy for the entire data team to monitor and improve data reliability.

With Soda's proven end-to-end testing, comprehensive data observability (such as metrics monitoring and anomaly detection), and a carefully designed issue resolution workflow, teams can detect issues early, get alerted instantly, and prevent bad data from cascading downstream.

Whether you’re a data engineer embedding checks in a Databricks notebook or a business user defining rules in Soda Cloud, everyone can contribute, and writing code is optional.

This article walks you through how to integrate Soda with Databricks, highlighting the practical benefits for data teams aiming to build trust in their data pipelines.

Integration Overview

Soda supports two main integration paths with Databricks:

- Option 1: Soda with Databricks SQL Warehouse

- Option 2: Soda with PySpark using the soda-spark-df package

Both methods enable technical and non-technical users to define and run data quality checks across Delta Lake tables or Spark DataFrames, providing flexibility based on user preferences and skill sets.

Option 1: Using Soda with Databricks SQL Warehouse

This approach is ideal for teams working in SQL-centric environments. It supports both:

- Soda Agent (UI-based DQ monitoring)

- Soda Library (CLI-based pipeline workflows)

Both tools connect directly to a Databricks SQL Warehouse using simple connection parameters.

With Soda Agent (No Code)

The Soda Agent is a tool that enables Soda Web UI users to securely connect to data sources and perform automated data quality scans.

Configure the connection to Databricks via the Soda Cloud UI to automatically trigger the Soda Agent service. This allows teams to collaborate and continuously monitor data quality directly from the web interface.

Follow these steps to connect Databricks in Soda Cloud:

Step 1: In Soda Cloud, go to the top-right corner:

Click your profile icon → select 'Data Sources' → add 'New Data Source' → configure 'Attributes' → choose 'Databricks Usage Monitor'

Step 2: On the 'Databricks Usage Monitor' page, configure your Databricks connection settings.

Step 3: Click 'Test Connection' to verify successful integration between Databricks and Soda Cloud.

Once connected, you can:

- Add built-in data quality checks to specific data sources

- Schedule automated scans

- Enable real-time anomaly detection

- Receive timely DQ alerts

- Resolve issues collaboratively through the UI

With Soda Library (Programmatic)

Soda Library is a Python package for running on-demand data quality scans, which makes it a good fit for CI/CD pipelines and quick local validations. It allows users to define data quality checks programmatically and send the results to Soda Cloud.

You can use Soda Library to integrate Soda into Databricks by following these steps:

Step 1: Install Soda Library with the soda-spark[databricks] package.

pip install -i https://pypi.cloud.soda.io soda-spark[databricks]

For more details, refer to this step-by-step guide: Connect to Spark for Databricks SQL

Step 2: Prepare two YAML files to let Soda connect Databricks and run your first DQ scan.

config.yml: stores connection details for your data sources and Soda Cloud account.

data_source my_datasource_name:
  type: spark
  method: databricks
  catalog: samples
  schema: nyctaxi
  host: hostname_from_Databricks_SQL_settings
  http_path: http_path_from_Databricks_SQL_settings
  token: my_access_token
soda_cloud:
  host: cloud.soda.io
  api_key_id: ${API_KEY}
  api_key_secret: ${API_SECRET}
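The `${API_KEY}` and `${API_SECRET}` placeholders in config.yml are resolved from environment variables, so it can help to confirm they are set before a scan runs. The following is a minimal sketch of such a pre-flight check using only the Python standard library. The helper name `missing_env_vars` and the `CONFIG_TEXT` sample are illustrative assumptions, not part of Soda.

```python
import os
import re

# Matches ${NAME} placeholders such as ${API_KEY} in a configuration file.
_PLACEHOLDER = re.compile(r"\$\{([A-Za-z_][A-Za-z0-9_]*)\}")


def missing_env_vars(config_text, environ=None):
    """Return placeholder names in config_text that have no value in environ.

    Hypothetical helper for illustration; it does not call Soda.
    """
    env = os.environ if environ is None else environ
    missing = []
    for name in _PLACEHOLDER.findall(config_text):
        # Keep first-seen order and skip repeated placeholders.
        if name not in missing and not env.get(name):
            missing.append(name)
    return missing


# Sample text mirroring the soda_cloud block of config.yml.
CONFIG_TEXT = """\
soda_cloud:
  host: cloud.soda.io
  api_key_id: ${API_KEY}
  api_key_secret: ${API_SECRET}
"""
```

In a pipeline, you might call `missing_env_vars(open("config.yml").read())` and stop early if the returned list is not empty.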

checks.yml: stores user-defined checks for routine data validation.

# Checks for basic validations
checks for YOUR_TABLE:
  - row_count between 10 and 1000
  - missing_count(column_name) = 0
  - invalid_count(column_name) = 0:
      valid min: 1
      valid max: 6
  - duplicate_count(column_name) = 0
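To make the intent of these four checks concrete, here is a rough plain-Python sketch of what each one measures on a small in-memory dataset. It is not Soda's implementation: Soda computes these metrics with queries against the data source, and the duplicate definition used here (distinct values that occur more than once) is an assumption. Because the check compares against zero, the pass or fail outcome is the same under either common definition.

```python
from collections import Counter


def evaluate_checks(rows, column, valid_min, valid_max, min_rows, max_rows):
    """Return {metric: (value, passed)} for the four checks in checks.yml.

    `rows` is a list of dicts; a value of None counts as missing.
    Illustrative only; this does not use Soda.
    """
    values = [row.get(column) for row in rows]
    present = [v for v in values if v is not None]

    row_count = len(rows)
    missing_count = len(values) - len(present)
    # Invalid: present, but outside the inclusive [valid_min, valid_max] range.
    invalid_count = sum(1 for v in present if not valid_min <= v <= valid_max)
    # Assumed definition: number of distinct values seen more than once.
    duplicate_count = sum(1 for n in Counter(present).values() if n > 1)

    return {
        "row_count": (row_count, min_rows <= row_count <= max_rows),
        "missing_count": (missing_count, missing_count == 0),
        "invalid_count": (invalid_count, invalid_count == 0),
        "duplicate_count": (duplicate_count, duplicate_count == 0),
    }
```

For example, a column holding `1, 2, None, 9, 2` with a valid range of 1 to 6 yields one missing value, one invalid value (9), and one duplicated value (2), so three of the four checks would fail.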

Step 3: Test Connection

To confirm that the connection details in your configuration YAML file are correct, use the test-connection command. Optionally, add the -V option to return verbose results in the CLI. The data source name passed with -d must match the name defined in config.yml.

soda test-connection -d my_datasource_name -c config.yml -V
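In a CI/CD job, the same CLI can be driven from a short Python wrapper. The sketch below builds the argument list for `soda test-connection` and `soda scan` and runs it with `subprocess`. The function names `soda_command` and `run_soda` are hypothetical, and treating any non-zero exit code as "do not proceed" is an assumption you may want to refine for your pipeline.

```python
import subprocess


def soda_command(action, data_source, config_path, checks_path=None, verbose=False):
    """Build the argument list for `soda test-connection` or `soda scan`."""
    if action not in ("test-connection", "scan"):
        raise ValueError(f"unsupported action: {action}")
    argv = ["soda", action, "-d", data_source, "-c", config_path]
    if verbose:
        argv.append("-V")
    if action == "scan":
        if checks_path is None:
            raise ValueError("scan requires a checks file")
        # The checks file is passed as a positional argument.
        argv.append(checks_path)
    return argv


def run_soda(argv):
    """Run the command and return its exit code (needs the soda CLI on PATH)."""
    return subprocess.run(argv, check=False).returncode
```

A pipeline step could call `run_soda(soda_command("scan", "my_datasource_name", "config.yml", "checks.yml"))` and fail the build when the result is not zero.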

Once configured, users can begin monitoring anomalies and start creating user-defined checks, enabling easy validation of data against predefined rules.

Option 2: Using Soda with PySpark in Databricks Notebooks

For PySpark users, the soda-spark-df library brings powerful data quality checks right into your notebook workflows. This makes it easy to embed data observability into ETL or ML pipelines without leaving the Databricks ecosystem.

You can get started immediately using this example notebook. Just define the target table, plug in your API keys from Soda Cloud, and you're ready to go.

You can install this package in two ways:

Notebook Installation:

pip install -i https://pypi.cloud.soda.io soda-spark-df

Cluster-level installation:

Install soda-spark-df directly on your Databricks cluster so that all notebooks attached to the cluster can use Soda without individual setup. This is ideal for production jobs or collaborative environments.

Once installed, you can start using Soda by importing and configuring the Scan object:

from soda.scan import Scan

# US regions use cloud.us.soda.io; EU regions use cloud.soda.io
host = "cloud.soda.io"
api_key_id = dbutils.secrets.get(scope="SodaCloud", key="keyid")
api_key_secret = dbutils.secrets.get(scope="SodaCloud", key="keysecret")

# Fully qualified table name to run DQ checks on
fq_table_name = "unity_catalog.quickstart_schema.customers_daily"

# This will be the name of the Data Source that appears in Soda Cloud.
# If also using an agent, ensure this value is identical
datasource_name = "databricksunitycatalogsql"

This setup connects your notebook directly to Soda Cloud, enabling you to define checks in YAML or run them inline with code.
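One way to continue from these variables is sketched below: render the Soda Cloud configuration and a few SodaCL checks as YAML strings, register the table as a temporary view, and execute a scan against the Spark session. The helper names, the `view_name` and `scan_definition_name` parameters, and the `id` column in the usage note are illustrative assumptions. The `Scan` methods used here are the ones this sketch assumes are available with soda-spark-df installed.

```python
def soda_cloud_config(host, api_key_id, api_key_secret):
    """Render the soda_cloud block of a Soda configuration as YAML text."""
    return (
        "soda_cloud:\n"
        f"  host: {host}\n"
        f"  api_key_id: {api_key_id}\n"
        f"  api_key_secret: {api_key_secret}\n"
    )


def basic_checks(dataset, id_column):
    """Render a small SodaCL block for one dataset (hypothetical helper)."""
    return (
        f"checks for {dataset}:\n"
        "  - row_count > 0\n"
        f"  - missing_count({id_column}) = 0\n"
        f"  - duplicate_count({id_column}) = 0\n"
    )


def run_notebook_scan(spark, fq_table_name, view_name, datasource_name,
                      scan_definition_name, config_yaml, checks_yaml):
    """Scan a table through a temp view; returns the executed Scan object."""
    # Imported lazily so the YAML helpers work without Soda installed.
    from soda.scan import Scan

    # Checks reference the temp view name, not the fully qualified table.
    spark.table(fq_table_name).createOrReplaceTempView(view_name)

    scan = Scan()
    scan.set_scan_definition_name(scan_definition_name)
    scan.set_data_source_name(datasource_name)
    scan.add_spark_session(spark, data_source_name=datasource_name)
    scan.add_configuration_yaml_str(config_yaml)
    scan.add_sodacl_yaml_str(checks_yaml)
    scan.execute()
    return scan
```

In a notebook, you might pass `soda_cloud_config(host, api_key_id, api_key_secret)` and `basic_checks("customers_daily", "id")` to `run_notebook_scan`, then print `scan.get_logs_text()` to inspect the results.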

You can also run Soda checks on external sources like PostgreSQL, all from within a Databricks notebook.

Soda allows you to push checks to Postgres, so they run within the Postgres environment, without moving data into Databricks, saving on compute and data transfer costs.

If all the checks pass, you can then update an existing Delta table in Databricks.

To get started, install the Postgres connector:

pip install -i https://pypi.cloud.soda.io soda-postgres
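The "check first, then update" pattern described above can be sketched as a small gate function. Here `scan` is assumed to be a `soda.scan.Scan` object already configured for the Postgres data source, and `update_delta_table` is a hypothetical callable that you supply to perform the Delta write. Neither the function name nor the callable comes from Soda.

```python
def run_gated_update(scan, update_delta_table):
    """Execute the scan and update the Delta table only on a clean result.

    Returns True when the update ran, False when it was skipped because
    the scan logged errors or at least one check failed.
    """
    scan.execute()
    if scan.has_error_logs() or scan.has_check_fails():
        # Leave the existing Delta table untouched when quality is in doubt.
        return False
    update_delta_table()
    return True
```

Passing the write step as a callable keeps the gate independent of how the Delta table is updated, whether that is a merge, an overwrite, or an append.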

Examples: Running Soda Checks in a Notebook

When using Soda in a Databricks notebook, you can automatically generate default checks, such as missing values, duplicate rows, freshness, and schema changes, with minimal configuration.

For more advanced validations, such as data reconciliation or custom business logic, you can either use SodaCL in the notebook or configure rules via the Soda Cloud UI.

Here’s an example output of a successful scan:

INFO | Scan summary:
INFO | 11/11 checks PASSED:
INFO | customer_updated in databricksunitycatalogsql
INFO | Total rows should not be 0 [PASSED]
INFO | Any changes in the schema [PASSED]
INFO | Duplicate Rows [PASSED]
INFO | No Missing values in ID [PASSED]
INFO | No Missing values in EMAIL [PASSED]
INFO | Freshness for the column CREATED_AT [PASSED]
INFO | All is good. No failures. No warnings. No errors.
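If you want to post-process output like this, for instance to summarize results in a job log, a small parser can pull out the check names and outcomes. This is a sketch that assumes the line layout shown in the sample (`INFO | <check name> [<OUTCOME>]`). The real log format may vary between versions, so querying the scan object directly is likely to be more robust where that is an option.

```python
import re
from collections import Counter

# Matches lines such as "INFO | Duplicate Rows [PASSED]".
_CHECK_LINE = re.compile(
    r"^\s*INFO\s*\|\s*(?P<name>.+?)\s*\[(?P<outcome>[A-Z_]+)\]\s*$"
)


def parse_check_outcomes(log_text):
    """Return a list of (check_name, outcome) tuples found in log_text."""
    results = []
    for line in log_text.splitlines():
        match = _CHECK_LINE.match(line)
        if match:
            results.append((match.group("name"), match.group("outcome")))
    return results


def summarize(outcomes):
    """Count outcomes, e.g. how many checks ended in each state."""
    return dict(Counter(outcome for _, outcome in outcomes))


# A few lines copied from the sample output above.
SAMPLE_LOG = """\
INFO | Scan summary:
INFO | 11/11 checks PASSED:
INFO | customer_updated in databricksunitycatalogsql
INFO | Total rows should not be 0 [PASSED]
INFO | Any changes in the schema [PASSED]
INFO | Duplicate Rows [PASSED]
INFO | All is good. No failures. No warnings. No errors.
"""
```

Header and summary lines carry no bracketed outcome, so the parser skips them and returns only the individual checks.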

Monitoring Data Quality on Soda Cloud UI

After integration, Soda can interact with Databricks via the Soda Cloud UI, allowing both technical and non-technical users to monitor key metrics and stay aligned on data health.

You can monitor data health in two main ways: pre-defined DQ metrics and automatic anomaly detection.

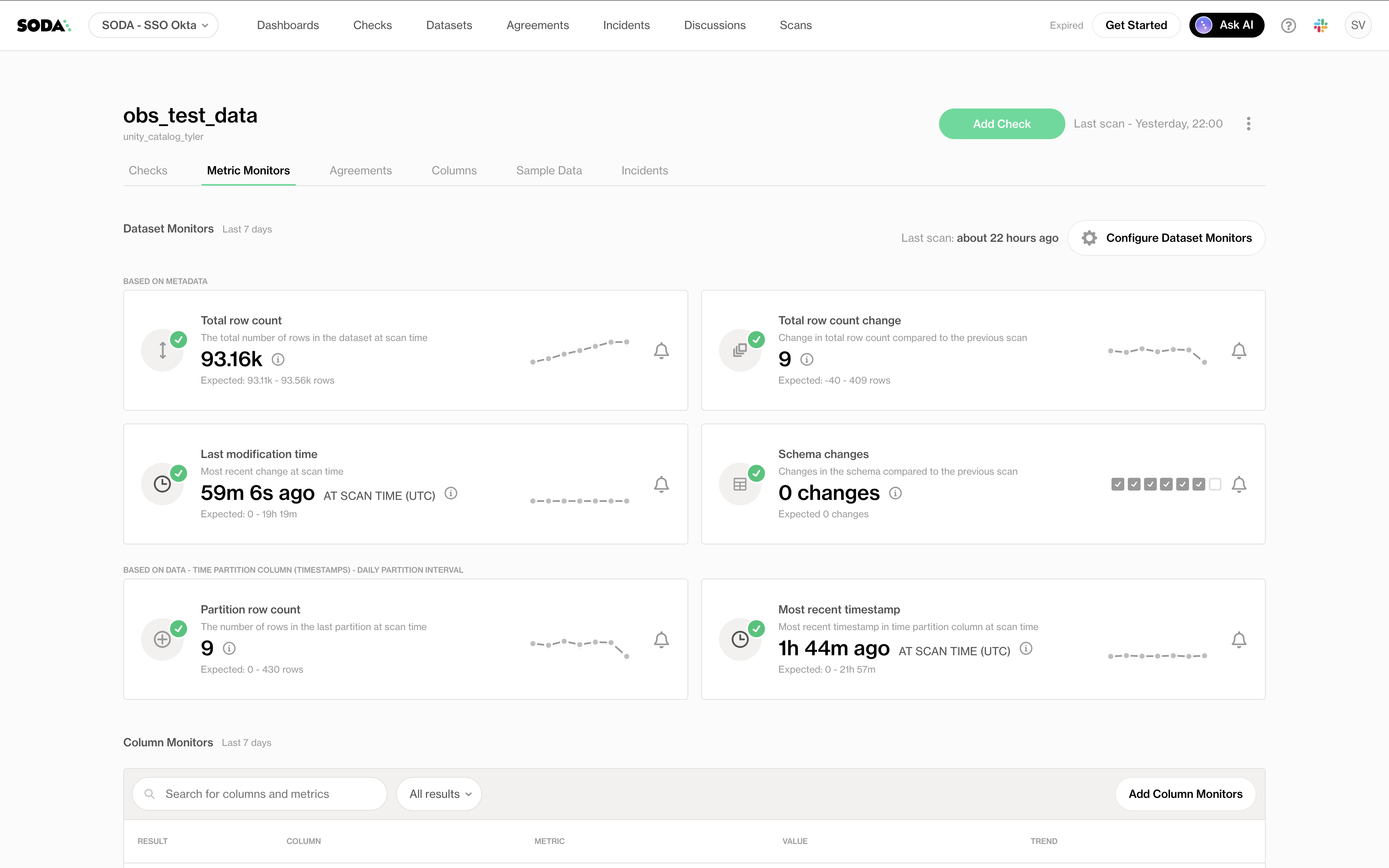

Metrics Monitoring

This dashboard gives a real-time summary of high-level dataset metrics over the past 7 days. Teams can monitor trends such as:

- Total row count and row count changes, with thresholds clearly defined and checked on every scan

- Schema changes, such as added or removed columns

- Last insertion time and most recent timestamps, which are crucial for freshness and timeliness tracking

- Missing values by column, shown at the bottom as part of column-level monitors

These metrics help teams identify issues early, such as unexpected data delays, structural changes, or shifts in volume, without diving into logs or code.
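To illustrate what these dataset-level metrics capture, the sketch below computes three of them in plain Python: schema changes between two scans, the change in row count, and a freshness test against a maximum age. All function names and thresholds are hypothetical. Soda derives its own metrics from the data source, so this only mirrors the idea.

```python
from datetime import datetime, timedelta, timezone


def schema_changes(previous_columns, current_columns):
    """Return columns added and removed between two scans."""
    prev, curr = set(previous_columns), set(current_columns)
    return {"added": sorted(curr - prev), "removed": sorted(prev - curr)}


def row_count_change(previous_count, current_count):
    """Return (absolute change, relative change); relative is None if previous is 0."""
    delta = current_count - previous_count
    relative = None if previous_count == 0 else delta / previous_count
    return delta, relative


def is_fresh(latest_timestamp, now, max_age):
    """True when the most recent record is no older than max_age."""
    return now - latest_timestamp <= max_age


# Example inputs (illustrative values, not taken from a real dataset).
NOW = datetime(2024, 1, 1, 13, 0, tzinfo=timezone.utc)
LAST_INSERT = datetime(2024, 1, 1, 12, 0, tzinfo=timezone.utc)
```

With these inputs, `is_fresh(LAST_INSERT, NOW, timedelta(hours=2))` holds, while a 30-minute limit would flag the dataset as stale.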

Anomaly Dashboard

The anomaly detection view allows you to monitor historical trends and detect data quality issues using statistical thresholds. Here, the partition row count is tracked over time, with anomalies clearly marked in red. This helps:

- Spot seasonality or volume spikes

- Detect silent data loss (e.g., partitions not populated)

- Tune alert sensitivity based on operational patterns

This dashboard is especially helpful when investigating unexpected behavior in pipelines, by giving teams the context they need to ask better questions or automate resolution.
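As a rough illustration of a statistical threshold, the sketch below flags a new row count when it lies more than a chosen number of standard deviations from the historical mean. This z-score rule is a simple stand-in chosen for clarity. It is not Soda's anomaly detection algorithm, and the function name and default threshold are assumptions.

```python
from statistics import mean, stdev


def is_anomalous(history, latest, z_threshold=3.0):
    """Flag `latest` when it deviates strongly from past observations.

    `history` holds earlier row counts; with fewer than two points there
    is not enough data to estimate spread, so nothing is flagged.
    """
    if len(history) < 2:
        return False
    mu = mean(history)
    sigma = stdev(history)
    if sigma == 0:
        # A perfectly constant history: any different value is unusual.
        return latest != mu
    return abs(latest - mu) / sigma > z_threshold


# Illustrative partition row counts from previous scans.
HISTORY = [1000, 1010, 990, 1005, 995]
```

With this history, a new count of 1002 stays within the threshold, while an empty partition (a count of 0) is flagged, which corresponds to the silent data loss case listed above. Raising or lowering `z_threshold` is one way to tune alert sensitivity.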

Benefits of Integration

Instead of putting the burden of data quality on a single team, Soda makes it a collaborative process. Issues become visible, actionable, and resolvable faster.

This means:

- Engineers stay close to the pipelines.

- Business users stay aligned with domain logic.

- Everyone stays on the same page.

It also unlocks the following advantages:

- Scalability: Natively supports Spark and Delta Lake, enabling checks on large-scale datasets.

- Automation: Seamlessly integrates with Databricks Jobs and Workflows, aligning with CI/CD pipelines.

- Early Detection: Proactively flags schema drift, null spikes, and other anomalies before they escalate.

- Governance: Enhances Unity Catalog by adding data quality and observability across domains.

Conclusion

Integrating Soda with Databricks allows data teams to embed a robust data quality framework directly into their workflows. By combining Databricks' processing power with Soda’s Data Observability, organizations can confidently scale without sacrificing trust or reliability.
